Neural Order

In this project we investigate the use of neural architectures for linguistic ordering. In particular, we want to apply a standard neural ordering algorithm to the syntactic problem of ordering the words of a sentence, in a multilingual context.

Surface Realisation (SR) is one of the main tasks involved in Natural Language Generation (NLG). SR is the final macrostep of the standard NLG pipeline defined by Reiter and Dale (2000), and it involves producing natural language sentences and longer documents from formal abstract representations. This input is assumed to come from an external source, such as a macro-planning and micro-planning pipeline, and is therefore assumed to contain all the information needed to create the final natural language output. The main responsibility of the SR component is to generate correct and fluent output in the target natural language.

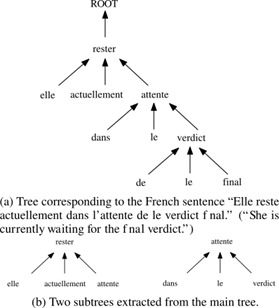

The SR task considered here is the surface realisation of Universal Dependencies (UD) trees, i.e., syntactic structures in which the words of a sentence are linked by labeled directed arcs.

UD represents natural language syntax with trees in which each node is a word. The label on each arc indicates the syntactic relation holding between a word and one of its dependent words (see the figure for an example).
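As a rough illustration of this structure, the sketch below encodes a small dependency tree in Python. The sentence, the `Node` class and the `dependents` helper are hypothetical and chosen only for this example; the relation labels (`det`, `nsubj`, `root`, `obj`) are standard UD relations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Node:
    idx: int      # 1-based position of the word in the sentence
    form: str     # the word itself
    head: int     # idx of the governing word, 0 for the root
    deprel: str   # label of the arc from the head to this word


# Hypothetical example sentence: "The dog chased a cat"
tree = [
    Node(1, "The", 2, "det"),
    Node(2, "dog", 3, "nsubj"),
    Node(3, "chased", 0, "root"),
    Node(4, "a", 5, "det"),
    Node(5, "cat", 3, "obj"),
]


def dependents(nodes):
    """Map each head index to the (relation, word) pairs it governs."""
    deps = defaultdict(list)
    for node in nodes:
        deps[node.head].append((node.deprel, node.form))
    return dict(deps)


deps = dependents(tree)
print(deps[3])  # dependents of "chased"
```

In the SR setting the linear positions (`idx`) would not be available in the input; they are kept here only to make the tree easy to read.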

Our approach to the SR task is based on supervised machine learning. We split the task into two independent subtasks: word order prediction and morphological inflection prediction. Two neural networks with different architectures run on the same input structure; each produces a partial output, and the two outputs are recombined in a final step to produce the predicted surface form.
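The recombination step can be sketched as follows. This is only a minimal illustration under stated assumptions: it supposes that the ordering network assigns each input token a scalar position score and that the inflection network returns one inflected form per token. The function name `recombine` and the example values are hypothetical and do not come from the project.

```python
def recombine(lemmas, predicted_positions, inflected_forms):
    """Sort tokens by predicted position and emit their inflected forms."""
    if not (len(lemmas) == len(predicted_positions) == len(inflected_forms)):
        raise ValueError("all inputs must describe the same tokens")
    # Indices of the tokens, sorted by the score from the ordering model.
    order = sorted(range(len(lemmas)), key=lambda i: predicted_positions[i])
    return " ".join(inflected_forms[i] for i in order)


# Assumed (made-up) outputs of the two networks for an unordered input.
lemmas = ["chase", "dog", "cat", "the", "a"]
predicted_positions = [0.55, 0.30, 0.95, 0.10, 0.80]      # ordering model
inflected_forms = ["chased", "dog", "cat", "the", "a"]    # inflection model

sentence = recombine(lemmas, predicted_positions, inflected_forms)
print(sentence)  # the dog chased a cat
```

Because the two subtasks are independent, either predictor could be replaced without touching the other, as long as both keep the same token indexing.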

The fitness of the model at each epoch is computed from the number of incorrect item inversions (an intrinsic evaluation) rather than from the downstream task score.
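One plausible reading of this measure is the number of token pairs whose relative order in the prediction differs from the gold order. The sketch below counts such pairs; the function name `count_inversions` and the assumption that tokens are identified by distinct ids are ours, not taken from the project code.

```python
from itertools import combinations


def count_inversions(predicted, gold):
    """Count token pairs that appear in opposite orders in the two sequences.

    Both sequences must contain the same distinct token ids.
    """
    rank = {tok: i for i, tok in enumerate(gold)}
    ranks = [rank[tok] for tok in predicted]
    # A pair is inverted when the earlier predicted token has the later gold rank.
    return sum(1 for a, b in combinations(ranks, 2) if a > b)


gold = [1, 2, 3, 4, 5]
print(count_inversions([2, 1, 3, 5, 4], gold))  # 2
print(count_inversions([5, 4, 3, 2, 1], gold))  # 10
```

A perfect ordering gives 0 and a fully reversed sequence of n tokens gives n(n-1)/2, so dividing by that maximum would yield a score that is comparable across sentences of different lengths.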

Contacts:

Alessandro Mazzei